Probing For Clarification – A Must-Have Skill For A Level 3 AI Assistant

Conversational AI is typically described in terms of five levels. Level 3 refers to contextual assistants, where the user no longer needs to know how to use the assistant or follow a pre-designed conversational flow.

Although building a contextual assistant remains the overarching goal, most virtual assistants get caught up in trying to answer as many user queries as possible. This often distracts them from the key elements of two-way communication that are essential for a successful conversational experience.

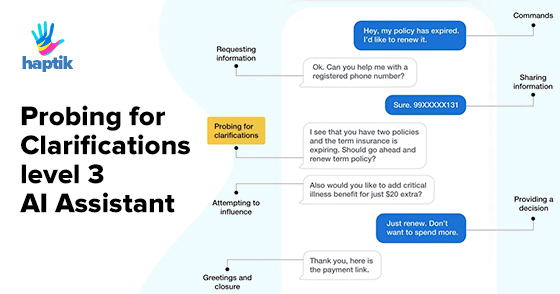

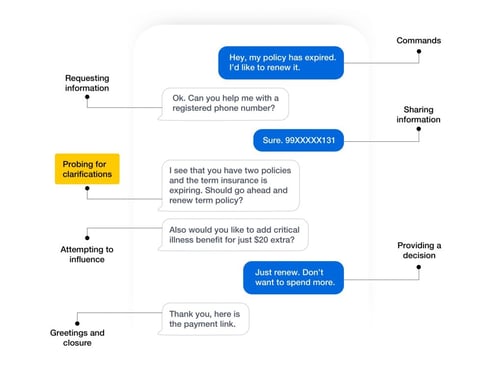

To understand this better, let’s take a look at some key dialogue exchanges between humans. Each can be broadly placed in one of the following categories:

This blog primarily highlights a few scenarios that explore an AI assistant’s ability to ask post-prediction probing questions before proceeding further in the conversation. We will then dive into the building blocks that need to be connected in order to build a robust probing system.

Why is probing an important skill for an AI assistant?

Active listening, mutual understanding, and trust are key aspects of reliable human conversations. Any gap in understanding puts trust and credibility at risk, which can ultimately make or break the overall experience. Hence, in order to build mutual understanding and common ground, it is essential and acceptable for humans to ask each other for clarification during conversations.

This activity of asking for clarification is referred to in this blog as probing the user, and the objective of probing is to help an AI assistant achieve the following:

1. Granular understanding of the user’s intention

2. Building credibility and reliability

3. Influencing the next action taken by the user

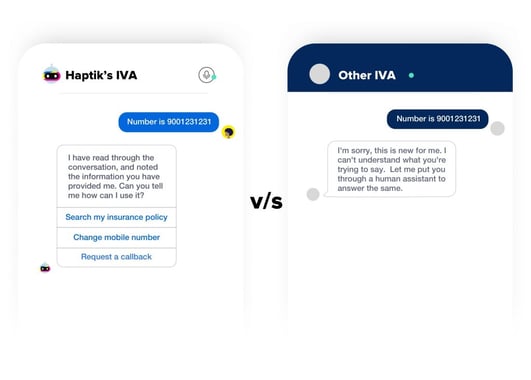

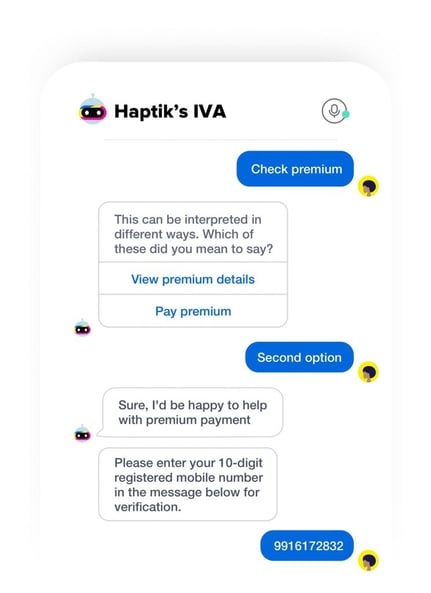

The following image captures the difference in end-user experience with and without probing.

What is required in order to build an accurate probing system?

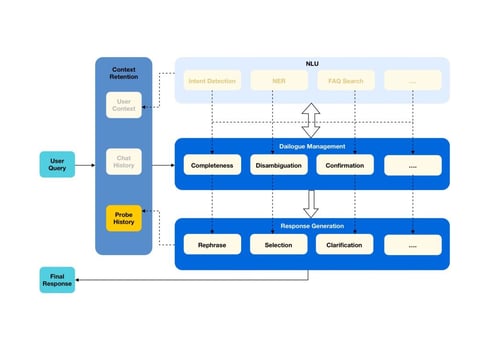

Building smart probing components into a conversation flow starts with the following checklist of building blocks.

1. Configurable Dialogue Management – The dialogue manager acts as a controller for most conversational AI systems. Training a good dialogue policy requires high-quality chat transcripts. In many cases, a sufficient number of transcripts may not be available, or they might not meet the quality standards needed for the best user experience. Hence, well-engineered, abstract and configurable dialogue state tracking becomes the key to orchestrating complex conversations. The dialogue manager needs two fundamental capabilities to enable probing:

- a. Recognise the need for clarification raised by any underlying NLU component.

- b. Identify the appropriate NLG mechanism and trigger the response generation needed for clarification.
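The two capabilities above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any particular dialogue manager: the `ClarificationRequest` type, the `PROBE_GENERATORS` registry, the component names and the `next_action` function are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class ClarificationRequest:
    """Signal raised by an NLU component that needs user input (hypothetical type)."""
    source: str         # component that raised it, e.g. "intent_detection"
    reason: str         # machine-readable reason, e.g. "ambiguous_intent"
    options: List[str]  # candidate interpretations to offer the user


Generator = Callable[[ClarificationRequest], str]

# Capability (b): map each kind of request to a response generator.
# Both entries are illustrative assumptions.
PROBE_GENERATORS: Dict[Tuple[str, str], Generator] = {
    ("speech_to_text", "low_confidence"):
        lambda req: "Your voice is not clear, can you speak again?",
    ("intent_detection", "ambiguous_intent"):
        lambda req: "Did you mean: " + " or ".join(req.options) + "?",
}


def next_action(requests: List[ClarificationRequest]) -> Optional[str]:
    """Return a probe for the first request that has a generator, else None."""
    # Capability (a): recognise clarification needs raised by NLU components.
    for req in requests:
        generator = PROBE_GENERATORS.get((req.source, req.reason))
        if generator is not None:
            return generator(req)
    return None  # nothing to clarify, continue the normal flow


request = ClarificationRequest(
    source="intent_detection",
    reason="ambiguous_intent",
    options=["track order", "cancel order"],
)
print(next_action([request]))
```

Keeping the registry as plain configuration means new probe types can be added without touching the control logic, which is one way to read "configurable" in this context.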

Connecting dots of Conversational AI

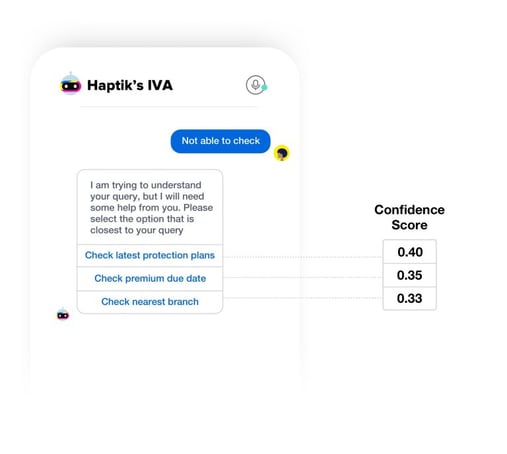

2. Accurate prediction of uncertainty – Deep learning models give a confidence score for every prediction they make. While there is always enough focus on the correctness of predictions, quantifying uncertainty in a predictable and reliable way is also essential for sensible probing.

For example, if a model always predicts a score in the range (0.9, 1) for True Positives and (0, 0.1) for True Negatives, and never predicts anything reliable in the range (0.1, 0.9), then its scores cannot sensibly signal when a probe is needed. The following are examples of a couple of components and how their scores can be used to probe and reduce ambiguity in conversation:

- a. Voice to text – There are cases when the score provided by the voice-to-text model is lower than the predefined threshold and noise corrections are not available. It is fair to trigger a probe such as ‘Your voice is not clear, can you speak again?’ instead of processing noisy text.

- b. Intent detection – In some scenarios, the intent detection model identifies multiple intents with similar scores, or a single intent with a score near the boundary region. In such a scenario, it is better to reconfirm with the user than to respond hastily.
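As a rough illustration of the two cases above, the sketch below turns component scores into probe decisions. All threshold values and function names are assumptions chosen for the example, and it presumes the scores are reasonably well calibrated, as discussed earlier in this section.

```python
from typing import Dict, Optional

# Illustrative values only; real thresholds would be tuned per model.
ASR_THRESHOLD = 0.6     # minimum acceptable voice-to-text score
INTENT_THRESHOLD = 0.5  # decision boundary for accepting an intent
BOUNDARY_BAND = 0.1     # scores this close to the boundary are "unsure"
MIN_MARGIN = 0.15       # minimum gap between the top two intents


def asr_probe(asr_score: float) -> Optional[str]:
    """Ask the user to repeat when the transcription score is too low."""
    if asr_score < ASR_THRESHOLD:
        return "Your voice is not clear, can you speak again?"
    return None


def intent_probe(scores: Dict[str, float]) -> Optional[str]:
    """Probe when intents are too close together or the top one is borderline."""
    if not scores:
        return None
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    top, top_score = ranked[0]
    # Case 1: several intents with similar scores.
    if len(ranked) > 1 and top_score - ranked[1][1] < MIN_MARGIN:
        return f"Did you mean '{top}' or '{ranked[1][0]}'?"
    # Case 2: a single intent whose score sits near the boundary region.
    if abs(top_score - INTENT_THRESHOLD) < BOUNDARY_BAND:
        return f"Just to confirm, do you want to '{top}'?"
    return None  # confident enough to respond directly


print(intent_probe({"track order": 0.48, "cancel order": 0.45}))
```

The margin check runs before the boundary check so that a genuinely ambiguous query offers both options rather than a yes/no confirmation of only the top one.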

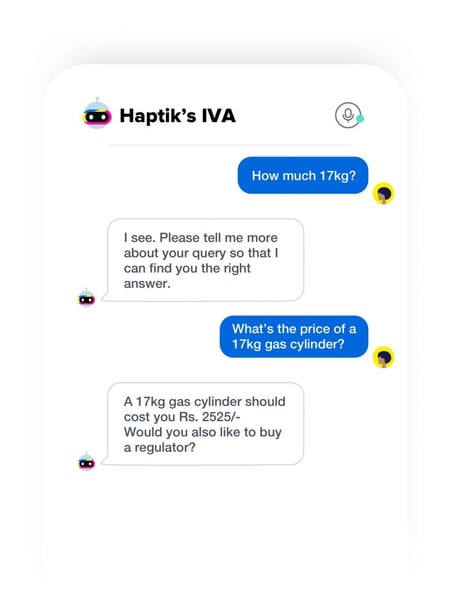

3. Granular understanding of user queries – A user query could be vague or incomplete, and might carry hidden domain-specific assumptions. It’s important to assess the completeness of the query, and if there are any assumptions or coreferences that the assistant cannot resolve, it’s fair to ask the user for clarification. You can check out a comprehensive article to dive deeper into understanding of user queries.

4. Reasoning-based response pipeline – An abstract response generator that can act on the input of the dialogue manager and support easy integration of multiple curated and automated NLG components is essential for an end-to-end probing mechanism.

5. Context retention from probe history – Post probing, it’s important to resolve coreferences and retain the context of the chat. Hence, a component that can interpret probe history and transform the relative user query into a resolved query is a must-have for continuing the conversation.
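A deliberately simple sketch of this idea follows: given the last probe and the user’s relative reply ("the second one", "yes"), it returns the resolved query. The `Probe` type, the keyword sets and `resolve_reply` are hypothetical names for illustration; a production system would likely rely on a trained coreference or query-rewriting model rather than keyword rules.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Probe:
    """One entry of probe history (hypothetical structure)."""
    question: str       # clarification that was shown to the user
    options: List[str]  # interpretations that were offered


AFFIRMATIVE = {"yes", "yeah", "yep", "correct"}
ORDINALS = {"first": 0, "second": 1, "third": 2}


def resolve_reply(probe: Probe, reply: str) -> Optional[str]:
    """Turn a relative reply into the option it refers to, or None if unclear."""
    text = reply.strip().lower()
    words = text.replace(".", " ").replace(",", " ").split()
    # A bare "yes" is only unambiguous when a single option was offered.
    if len(probe.options) == 1 and any(w in AFFIRMATIVE for w in words):
        return probe.options[0]
    # Ordinal references such as "the second one".
    for word in words:
        index = ORDINALS.get(word)
        if index is not None and index < len(probe.options):
            return probe.options[index]
    # The user repeated one of the offered options verbatim.
    for option in probe.options:
        if option.lower() in text:
            return option
    return None  # still unresolved; the assistant may need to probe again


last_probe = Probe(
    question="Did you mean 'cancel order' or 'update delivery address'?",
    options=["cancel order", "update delivery address"],
)
print(resolve_reply(last_probe, "the second one"))
```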

Conclusion

Regardless of the progress in Machine Learning Algorithms and availability of Training Data, boundary cases are here to stay in the near future. Hence, it’s important for an AI assistant to deal with them gracefully and accurately.

Most importantly, what is the probability of the user dropping off rather than responding to the clarification? At Haptik, we’ve seen that close to 90 percent of users respond when accurately probed for clarification. This in turn provides us with a rich channel of data to improve our virtual assistants and make user experiences better in the future. We believe that there is still a lot that AI assistants need to learn in order to understand users granularly, and we will continue encouraging more research in this direction. You can also read more about our probing module ‘Smart Assist’ to understand its business impact.