GPT-4o Mini and Llama 3.1 8B: Powerful and Cost-Effective LLMs for Enterprise at Scale

Since the release of ChatGPT, several trends have emerged in the fast-moving world of generative AI. The range of large language models (LLMs) continues to expand, with specialized models for specific business requirements. OpenAI’s GPT-4o Mini and Meta’s Llama 3.1 8B stand out as highly efficient models that offer advanced natural language understanding (NLU) and generation capabilities, enabling human-like, context-aware conversations. They provide robust capabilities for task automation, streamlined customer service, and data-driven decision-making, making them strong choices for enterprises that want to improve their operations without incurring high costs.

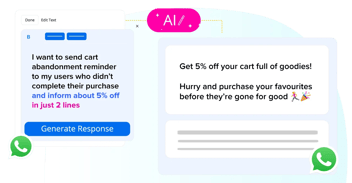

Cost-Effectiveness

OpenAI lets developers fine-tune GPT-4o Mini, with free access to the first two million training tokens per day until September 23, 2024. Within that allowance, developers can customize the model for specific applications without incurring additional training costs. As a compact model, GPT-4o Mini also needs less computation to process each request, significantly reducing computational requirements and operational costs for enterprises. It is available through a global pay-as-you-go deployment option with high throughput limits and costs 60% less than GPT-3.5 Turbo.
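For teams planning a fine-tuning run, the sketch below prepares training data in the chat-style JSONL format used by OpenAI’s fine-tuning endpoint (one JSON object per line, each holding a `messages` list) and roughly checks it against the two-million-token daily allowance. The sample conversations, the file name `train.jsonl`, the epoch count, and the `estimate_tokens` helper (a crude four-characters-per-token heuristic, not a real tokenizer) are all illustrative assumptions. Billed training tokens are roughly the tokens in the file multiplied by the number of epochs.

```python
import json
from pathlib import Path

# Hypothetical support conversations used as training examples.
EXAMPLES = [
    ("Where is my order #1234?",
     "Let me check that for you. Could you confirm the email used at checkout?"),
    ("How do I reset my password?",
     "Click 'Forgot password' on the login page and follow the emailed link."),
]
SYSTEM_PROMPT = "You are a concise, friendly customer-support assistant."
DAILY_FREE_TOKENS = 2_000_000  # free daily allowance described above (time-limited offer)
N_EPOCHS = 3  # assumption: number of training epochs planned

def to_chat_record(user_msg: str, assistant_msg: str) -> dict:
    """Build one training example in the chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": user_msg},
            {"role": "assistant", "content": assistant_msg},
        ]
    }

def estimate_tokens(record: dict) -> int:
    """Rough estimate (~4 characters per token); use a real tokenizer for budgeting."""
    chars = sum(len(m["content"]) for m in record["messages"])
    return max(1, chars // 4)

def write_jsonl(path: Path, records: list[dict]) -> None:
    """Write one JSON object per line."""
    with path.open("w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")

records = [to_chat_record(u, a) for u, a in EXAMPLES]
write_jsonl(Path("train.jsonl"), records)

# Training tokens scale with file size times epochs.
approx_training_tokens = sum(estimate_tokens(r) for r in records) * N_EPOCHS
print(f"~{approx_training_tokens} training tokens; "
      f"within daily free allowance: {approx_training_tokens <= DAILY_FREE_TOKENS}")
```

A real job needs far more examples than the two shown here; the resulting file would then be uploaded and referenced when creating a fine-tuning job, following OpenAI’s fine-tuning guide for current model names and limits.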

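Llama 3.1 8B, on the other hand, is an open-weight model that enterprises can host on their own hardware, so its costs are tied to infrastructure rather than to per-token usage. At scale, this hardware-based pricing can be more economical than token-based models, letting enterprises allocate resources more effectively and streamline operations.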
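Whether hardware-based pricing actually beats token-based pricing depends on volume. A minimal break-even sketch, assuming an always-on rented GPU and a flat monthly operations overhead: every price below is a placeholder rather than a real quote, and the model ignores whether a single GPU can keep up with the traffic.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # average hours per month (24 * 365 / 12, rounded)

@dataclass
class TokenPricing:
    """Per-token API pricing in USD per million tokens (placeholder values below)."""
    input_per_m: float
    output_per_m: float

@dataclass
class SelfHosting:
    """Self-hosting cost: always-on GPU rental plus fixed monthly operations overhead."""
    gpu_hourly_usd: float
    gpu_count: int
    monthly_overhead_usd: float

def api_monthly_cost(p: TokenPricing, input_tokens: float, output_tokens: float) -> float:
    """Token-based cost grows linearly with usage."""
    return input_tokens / 1e6 * p.input_per_m + output_tokens / 1e6 * p.output_per_m

def self_hosted_monthly_cost(h: SelfHosting) -> float:
    """Hardware-based cost is flat, assuming the GPUs can absorb the load."""
    return h.gpu_hourly_usd * h.gpu_count * HOURS_PER_MONTH + h.monthly_overhead_usd

def breakeven_input_tokens(p: TokenPricing, h: SelfHosting, output_ratio: float) -> float:
    """Monthly input-token volume at which both options cost the same.

    output_ratio is the number of output tokens generated per input token.
    """
    usd_per_input_token = (p.input_per_m + output_ratio * p.output_per_m) / 1e6
    return self_hosted_monthly_cost(h) / usd_per_input_token

# Placeholder figures for illustration only; substitute real quotes.
PRICING = TokenPricing(input_per_m=0.20, output_per_m=0.80)
HOSTING = SelfHosting(gpu_hourly_usd=1.50, gpu_count=1, monthly_overhead_usd=500.0)

volume = breakeven_input_tokens(PRICING, HOSTING, output_ratio=0.25)
print(f"Self-hosting costs ${self_hosted_monthly_cost(HOSTING):,.0f}/month")
print(f"Break-even at ~{volume / 1e9:.2f}B input tokens/month")
```

With these placeholder numbers, self-hosting costs $1,595 per month and breaks even at roughly 4 billion input tokens per month; below that volume the per-token API is cheaper, above it the flat hardware cost wins.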

Enhanced Efficiency

GPT-4o Mini’s efficiency is particularly valuable for real-time applications. Its compact, fast architecture processes tokens rapidly, enabling enterprises to handle high volumes of data and interactions. Businesses can respond to customer queries, process transactions, and glean insights in real time, leading to more agile implementations and better business outcomes.

Llama 3.1 8B can be fine-tuned to handle specific enterprise tasks with high accuracy, helping the model deliver precise results and reducing the need for manual corrections and interventions.

Improved Customer Experience

GPT-4o Mini’s ability to deliver real-time responses at scale improves the quality of interactions, leading to better customer experiences and more efficient customer handling. By providing accurate, context-aware responses quickly, it shortens query resolution times and streamlines support processes.

Similarly, Llama 3.1 8B’s 128,000-token context window lays the foundation for comprehensive, nuanced conversations that elevate customer support. Because details from earlier interactions with the same customer can be included in the prompt, the model can personalize its replies and deliver more context-aware responses. Llama 3.1 8B also supports multiple languages, making it well suited to applications that serve a global audience.
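The model itself is stateless, so carrying details across interactions means re-sending prior turns within the context window. A minimal sketch of that bookkeeping, assuming the 128,000-token window cited above, a hypothetical 1,024-token reserve for the reply, and a hypothetical `rough_token_count` helper standing in for the model’s real tokenizer: it keeps the system prompt and the new message, then adds earlier turns newest-first until the budget runs out.

```python
from dataclasses import dataclass

CONTEXT_WINDOW = 128_000     # context length cited for Llama 3.1 8B, in tokens
RESERVED_FOR_REPLY = 1_024   # assumption: tokens left free for the model's answer

@dataclass
class Turn:
    role: str      # "system", "user", or "assistant"
    content: str

def rough_token_count(text: str) -> int:
    """Hypothetical stand-in for a real tokenizer (~4 characters per token)."""
    return max(1, len(text) // 4)

def build_context(system_prompt: str, history: list[Turn], new_message: str,
                  budget: int = CONTEXT_WINDOW - RESERVED_FOR_REPLY) -> list[Turn]:
    """Return the prompt turns: system prompt, as much recent history as fits, new message."""
    used = rough_token_count(system_prompt) + rough_token_count(new_message)
    kept: list[Turn] = []
    for turn in reversed(history):          # walk from the newest turn backwards
        cost = rough_token_count(turn.content)
        if used + cost > budget:
            break                           # older turns no longer fit; drop them
        kept.append(turn)
        used += cost
    kept.reverse()                          # restore chronological order
    return [Turn("system", system_prompt), *kept, Turn("user", new_message)]

history = [Turn("user", "My order hasn't arrived."),
           Turn("assistant", "Sorry to hear that! What's the order number?")]
messages = build_context("You are a helpful support agent.", history, "It's 1234.")
print([t.role for t in messages])
```

Dropping the oldest turns first is the simplest policy; a production system might instead summarize older exchanges or retrieve only the relevant ones.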

Reliable and Scalable

Llama 3.1 8B can be deployed in air-gapped environments, keeping data on premises to support compliance, reduce the risk of data breaches, and ensure sensitive information is handled securely. GPT-4o Mini, meanwhile, has the same built-in safety mechanisms as GPT-4o and is trained with OpenAI’s instruction hierarchy method, which improves resistance to jailbreaks (by up to 30%) and protection against system prompt extraction (by up to 60%).

Because data security and privacy are paramount, these safeguards let enterprises deploy GPT-4o Mini applications with greater confidence that sensitive information stays protected and that regulatory requirements are met.

Both GPT-4o Mini and Llama 3.1 8B support seamless scaling, allowing enterprises to manage increased workloads efficiently without compromising performance. As business demands grow, the AI infrastructure can adapt and continue to operate efficiently.

Implementation Strategies by Haptik

At Haptik, we’re excited to leverage these compact LLMs to design lightweight applications that allow businesses to innovate and unlock growth in a competitive market.

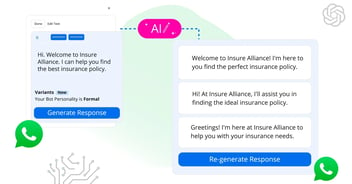

- Integrate models into AI assistants: Both GPT-4o Mini and Llama 3.1 8B can be integrated into Haptik’s chatbot framework to enhance its NLU and natural language processing (NLP) capabilities, providing more accurate, context-aware responses.

- Fine-tuning for specific use cases: We use the fine-tuning capabilities of these models to tailor them to industry-specific needs in areas such as eCommerce, healthcare, and customer service. This helps deliver more personalized and relevant customer interactions.

- Hybrid deployment: We aim to implement a hybrid approach where simpler queries are handled by smaller models, and complex queries are escalated to GPT-4o Mini or Llama 3.1 8B. This will optimize resource usage while maintaining high-quality responses.
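The hybrid approach above amounts to a router in front of two model tiers. A minimal sketch, assuming a hand-written complexity heuristic: the keyword list, the 0.5 threshold, and the `small_model`/`large_model` stubs are hypothetical placeholders, not Haptik’s actual routing logic.

```python
# Hypothetical signals that a query needs deeper reasoning (naive substring check).
COMPLEX_HINTS = ("compare", "explain", "why", "step by step")
THRESHOLD = 0.5  # assumption: tune on real traffic

def complexity_score(query: str) -> float:
    """Toy heuristic: long, multi-question, or reasoning-heavy queries score higher."""
    text = query.lower()
    score = min(len(text.split()) / 40, 1.0)     # length contributes up to 1.0
    score += 0.3 * max(text.count("?") - 1, 0)   # several questions in one message
    if any(hint in text for hint in COMPLEX_HINTS):
        score += 0.5
    return score

def small_model(query: str) -> str:
    # Placeholder for a lightweight model, e.g. an intent classifier with canned answers.
    return f"[small] {query}"

def large_model(query: str) -> str:
    # Placeholder for a GPT-4o Mini or Llama 3.1 8B call.
    return f"[large] {query}"

def handle(query: str) -> tuple[str, str]:
    """Route the query to a tier and return (tier, reply)."""
    if complexity_score(query) >= THRESHOLD:
        return "large", large_model(query)
    return "small", small_model(query)

for q in ("What are your opening hours?",
          "Can you compare the premium and basic plans and explain why my bill went up?"):
    tier, reply = handle(q)
    print(tier, "->", reply)
```

A keyword heuristic keeps the sketch self-contained; in practice the routing score would more likely come from a trained classifier or from the small model’s own confidence.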
